Enterprise AI Strategy


Agentic AI systems, which can reason, plan, and execute tasks autonomously, are rapidly moving from experimental concepts to practical enterprise applications. However, these agents deliver real operational value only when they can interact effectively and efficiently with the wide landscape of enterprise tools, data sources, and APIs. Giving agents access to the contextual information and functionality they need is a significant integration hurdle: custom-coding the connection between each agent and each system is complex and time-consuming, and it hinders scalable deployment.

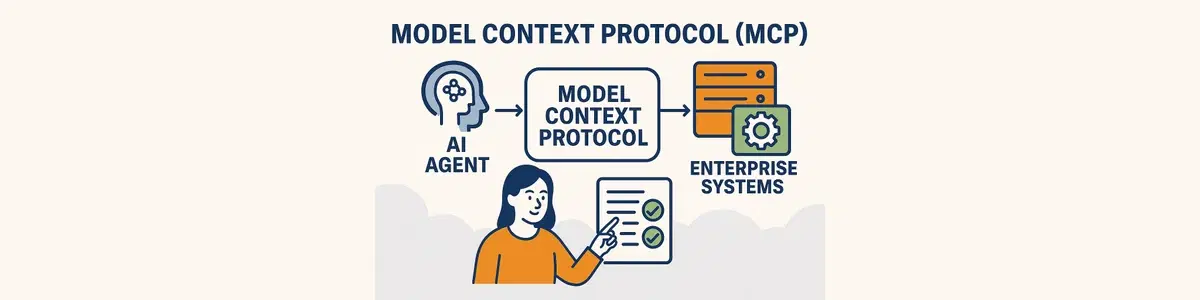

To directly address this central challenge in harnessing Agentic AI, the Model Context Protocol (MCP) is emerging as a pivotal technical standard. MCP aims to provide a standardized communication layer, fundamentally simplifying how these intelligent agents connect with the diverse and distributed systems they rely upon. This article will explain what MCP is, detail how it works to solve these agent-specific integration issues, explore its components, and then illustrate its practical application with a detailed example from the retail sector.

Modern AI agents derive their power from their ability to interact with their environment. This means accessing data from various databases, utilizing specialized software tools, invoking APIs, and processing information from different enterprise applications. For instance, an AI agent in finance might need to pull data from market feeds, risk assessment tools, and regulatory databases. In healthcare, an agent could assist by interfacing with patient record systems, medical imaging tools, and research portals.

Without a standardized approach, integrating AI agents with these systems typically involves:

Bespoke, Point-to-Point Integrations: Developers must write custom code for each connection an AI agent makes to a specific tool or data source.

Brittleness and Maintenance Overheads: These custom integrations are often fragile. If an underlying system's API changes, the integration breaks, requiring rework. Managing dozens or hundreds of such connections becomes a significant maintenance burden.

Scalability Issues: As the number of AI agents and the variety of tools and data sources grow, this custom approach doesn't scale efficiently, slowing down innovation.

High Development Costs & Slow Deployment: The time and resources required for these integrations delay the deployment of valuable AI solutions.

These challenges are not unique to any single industry; they are common pain points for any enterprise looking to deploy AI agents at scale.

The Model Context Protocol (MCP) is an open standard that provides a universal communication method for AI applications (like Large Language Models or autonomous AI agents) to interact with external tools, data services, and other enterprise systems.

Think of MCP as a "universal adapter" or "USB-C port for AI." Just as USB-C offers a consistent physical connector and protocol for various hardware devices, MCP aims to provide a universal software interface. Instead of needing a different "plug" (custom code) for every tool or data source an AI agent needs to use, MCP offers one standardized connection method.

The core aim of MCP is to let AI applications discover and use the services and data sources they need through this common, simplified interface, regardless of the technology behind those services.

MCP standardizes these interactions through a defined architecture and a clear communication flow:

MCP Host: This is the AI application itself that needs to access external context or tools (e.g., an enterprise chatbot, an AI-powered analytics tool, an autonomous workflow agent). The Host orchestrates the overall task.

MCP Client: Software residing within the MCP Host that acts as an intermediary, with each client maintaining a dedicated connection to one MCP Server. It takes the Host's need for a tool or data, formats it into a standardized MCP request, sends it to its server, receives the MCP response, and parses it back into a format the Host AI can understand.

MCP Server: A program or service that "wraps" an existing tool, data source, or capability, exposing it through the standardized MCP interface. For example, an enterprise's legacy database could have an MCP Server built around it, allowing AI agents to query it using MCP without needing to understand its native query language directly. MCP Servers advertise their capabilities so Hosts/Clients can discover and use them.
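To make the server role concrete, here is a minimal Python sketch of a server that wraps a legacy lookup and advertises one tool. It assumes the JSON-RPC 2.0 message shapes used by the MCP specification (the `tools/list` and `tools/call` methods, and tool descriptors carrying an `inputSchema`), but it is an in-process illustration, not the official SDK: the function names, the `get_product` tool, and the catalog data are hypothetical, and a real server would also handle the `initialize` handshake and run over a transport such as stdio or HTTP.

```python
import json

# Hypothetical legacy system with its own native lookup call.
# Agents never see this function; only the server below calls it.
_LEGACY_TABLE = {"SKU-1": {"name": "Linen shirt", "price": 39.0}}


def legacy_fetch(sku):
    return _LEGACY_TABLE.get(sku)


# Advertised capability: a tool descriptor (name, description, JSON Schema
# for the arguments), shaped like an entry in an MCP tools/list result.
TOOLS = [{
    "name": "get_product",
    "description": "Look up a product by SKU.",
    "inputSchema": {
        "type": "object",
        "properties": {"sku": {"type": "string"}},
        "required": ["sku"],
    },
}]


def handle(raw):
    """Process one JSON-RPC 2.0 request string; return the response string."""
    req = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": req["id"]}
    params = req.get("params", {})
    if req["method"] == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif req["method"] == "tools/call" and params.get("name") == "get_product":
        # Translate the standardized call into the legacy system's native call.
        row = legacy_fetch(params.get("arguments", {}).get("sku"))
        text = json.dumps(row) if row is not None else "Product not found"
        reply["result"] = {"content": [{"type": "text", "text": text}],
                           "isError": row is None}
    elif req["method"] == "tools/call":
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(reply)


# Discovery: a client asks what the server offers before calling anything.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
print([tool["name"] for tool in listing["result"]["tools"]])
```

The agent only ever sees `get_product` and its schema; if the legacy table were replaced by a real database client, only `legacy_fetch` would change.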

A typical interaction then proceeds as follows:

The MCP Host (AI application) identifies a need for an external tool or data.

The MCP Client (within the Host) formulates a standardized request and sends it to the relevant MCP Server.

The MCP Server receives the request and processes it by interacting with its underlying tool or data source (e.g., executes a function, retrieves data).

The Server returns a standardized MCP response (containing the data or tool output) back to the Client.

The Client provides this structured information to the Host AI, enabling it to proceed with its task.
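The five steps can be sketched from the client's side. The class below is a hypothetical illustration, not an MCP SDK API: it builds a `tools/call` request in the JSON-RPC 2.0 shape the MCP specification uses, hands it to a `transport` callable (a stand-in for a real stdio or HTTP connection), and turns the response's text content into something the host can use. `ClientSketch`, `echo_server`, and the `lookup` tool are assumptions made for the example.

```python
import json
from itertools import count


class ClientSketch:
    """Hypothetical MCP-style client: formats requests, sends them, parses replies.

    `transport` is any callable that takes a request string and returns a
    response string; real MCP deployments use stdio or HTTP-based transports.
    """

    def __init__(self, transport):
        self._transport = transport
        self._ids = count(1)  # JSON-RPC ids pair each response with its request

    def call_tool(self, name, arguments):
        # Step 2: build a standardized JSON-RPC 2.0 tools/call request.
        request = {"jsonrpc": "2.0", "id": next(self._ids), "method": "tools/call",
                   "params": {"name": name, "arguments": arguments}}
        # Steps 3-4: the server does the work and sends back a standard response.
        response = json.loads(self._transport(json.dumps(request)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        result = response["result"]
        # Step 5: give the host plain text it can reason over.
        texts = [item["text"] for item in result["content"] if item["type"] == "text"]
        return {"text": "\n".join(texts), "is_error": result.get("isError", False)}


def echo_server(raw):
    """Stand-in server: answers every tools/call by echoing the tool name and arguments."""
    req = json.loads(raw)
    text = f"{req['params']['name']} called with {req['params']['arguments']}"
    result = {"content": [{"type": "text", "text": text}]}
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# Step 1: the host decides it needs a tool and delegates the call to its client.
client = ClientSketch(echo_server)
print(client.call_tool("lookup", {"q": "x"})["text"])
```

Keeping the transport as a plain callable is a design choice for the sketch: the request-building and response-parsing logic stays the same whichever connection carries the bytes.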

MCP defines a small set of standard interaction types that servers can expose, including:

Tools: Actions an AI agent can request an MCP Server to perform (e.g., query_database, generate_report, send_notification).

Resources: Structured data an MCP Server can provide to an AI agent (e.g., customer records, product specifications, financial data).

The critical aspect is standardization. By defining a common structure for how tools are described and invoked and how data is exchanged, MCP allows any MCP-compliant Host to interact with any MCP-compliant Server.
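The payoff of a shared message format is that client code does not change from server to server. This sketch builds two unrelated, hypothetical servers (a CRM and a notification service) from one helper and drives both with the same generic `rpc` function, covering both interaction types: tools via `tools/list` and `tools/call`, and resources via `resources/list` and `resources/read`. The method names and result fields are modeled on the MCP specification; everything else, including the tool names, URIs, and record text, is invented for illustration.

```python
import json


def make_server(tools, resources):
    """Build a hypothetical in-process MCP-style server from plain dicts.

    tools: name -> (description, function(arguments dict) -> str)
    resources: uri -> text
    """
    def handle(raw):
        req = json.loads(raw)
        method, params = req["method"], req.get("params", {})
        reply = {"jsonrpc": "2.0", "id": req["id"]}
        if method == "tools/list":
            reply["result"] = {"tools": [{"name": name, "description": desc,
                                          "inputSchema": {"type": "object"}}
                                         for name, (desc, _) in tools.items()]}
        elif method == "tools/call" and params.get("name") in tools:
            _, fn = tools[params["name"]]
            text = fn(params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": text}]}
        elif method == "resources/list":
            reply["result"] = {"resources": [{"uri": uri, "name": uri} for uri in resources]}
        elif method == "resources/read" and params.get("uri") in resources:
            reply["result"] = {"contents": [{"uri": params["uri"], "mimeType": "text/plain",
                                             "text": resources[params["uri"]]}]}
        elif method in ("tools/call", "resources/read"):
            reply["error"] = {"code": -32602, "message": "Unknown tool or resource"}
        else:
            reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    return handle


def rpc(server, method, params=None):
    """Generic client call: the same code works against any server built this way."""
    req = {"jsonrpc": "2.0", "id": 1, "method": method}
    if params is not None:
        req["params"] = params
    reply = json.loads(server(json.dumps(req)))
    if "error" in reply:
        raise RuntimeError(reply["error"]["message"])
    return reply["result"]


# Two unrelated, hypothetical servers exposing the same protocol surface.
crm = make_server(
    tools={"lookup_customer": ("Find a customer by id", lambda a: f"customer {a['id']}")},
    resources={"crm://customers/42": "sample record: id=42, tier=gold"},
)
notify = make_server(
    tools={"send_notification": ("Queue a message", lambda a: "queued")},
    resources={},
)

for server in (crm, notify):
    print([t["name"] for t in rpc(server, "tools/list")["tools"]])
print(rpc(crm, "resources/read", {"uri": "crm://customers/42"})["contents"][0]["text"])
```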

To see how MCP works in a practical scenario, let's consider "RetailCorp," a large e-commerce business. Like many businesses, RetailCorp wants to leverage AI for better customer service and more efficient internal operations, and it plans two AI agents:

AI Customer Service Chatbot: Needs to access product information (from a PIM system), order status (from an OMS), customer history (from a CRM), and promotion details.

AI Inventory Assistant (Internal): Needs to access live inventory data, sales history, supplier information, and marketing plans to help predict stock needs.

Without MCP, connecting these AI agents to RetailCorp's varied backend systems would require extensive custom integration for each.

RetailCorp implements MCP Servers for its key systems: an "Inventory MCP Server," a "Product Catalog MCP Server," a "Customer Order MCP Server," and so on. Its AI Chatbot and AI Inventory Assistant act as MCP Hosts, with built-in MCP Clients.

Customer Chatbot (External Use Case):

A customer asks the Chatbot (MCP Host): "What's the status of my order #ORD123?"

The Chatbot's MCP Client sends a standard MCP request like ToolCall: GetOrderStatus, OrderID: "ORD123" to RetailCorp's "Customer Order MCP Server."

This MCP Server queries RetailCorp's actual Order Management System and gets the status.

The MCP Server returns a standard MCP response (e.g., "Status: Shipped, Tracking: ABC987") to the Chatbot.

The Chatbot informs the customer.

MCP Advantage for RetailCorp: The chatbot interacts via MCP, not the OMS's specific API. If RetailCorp changes its OMS, only the "Customer Order MCP Server" needs updating, not the chatbot's core logic.
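The OMS-swap argument can be shown in a few lines. In this hypothetical sketch, the "Customer Order MCP Server" delegates `GetOrderStatus` to an adapter for whichever order system is in use. Replacing `LegacyOMS` with `NewOMS` changes only the adapter passed to the server; `chatbot_order_status`, standing in for the chatbot's MCP call, is untouched. Both order systems, their native methods, and the adapters are assumptions; the tool name, order ID, and tracking number come from the example above, and the message shapes are modeled on MCP's JSON-RPC `tools/call`.

```python
import json


class LegacyOMS:
    """Hypothetical current order system with its own native API shape."""
    def fetch_order(self, oid):
        return {"ORD123": {"state": "SHIPPED", "trk": "ABC987"}}.get(oid)


class NewOMS:
    """Hypothetical replacement order system with a different native API."""
    def get(self, order_ref):
        return {"ORD123": ("Shipped", "ABC987")}.get(order_ref)


# Adapters translate each native API into one internal shape: (status, tracking) or None.
def legacy_adapter(oms):
    def status(order_id):
        row = oms.fetch_order(order_id)
        return (row["state"].title(), row["trk"]) if row else None
    return status


def new_adapter(oms):
    return oms.get


def customer_order_server(status_fn):
    """Hypothetical 'Customer Order MCP Server' exposing one tool, GetOrderStatus."""
    def handle(raw):
        req = json.loads(raw)
        reply = {"jsonrpc": "2.0", "id": req["id"]}
        if req["method"] != "tools/call" or req["params"]["name"] != "GetOrderStatus":
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
            return json.dumps(reply)
        found = status_fn(req["params"]["arguments"]["OrderID"])
        text = f"Status: {found[0]}, Tracking: {found[1]}" if found else "Order not found"
        reply["result"] = {"content": [{"type": "text", "text": text}],
                           "isError": found is None}
        return json.dumps(reply)
    return handle


def chatbot_order_status(server, order_id):
    """Chatbot side: an identical MCP call no matter which OMS sits behind the server."""
    req = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "GetOrderStatus", "arguments": {"OrderID": order_id}}}
    return json.loads(server(json.dumps(req)))["result"]["content"][0]["text"]


before = chatbot_order_status(customer_order_server(legacy_adapter(LegacyOMS())), "ORD123")
after = chatbot_order_status(customer_order_server(new_adapter(NewOMS())), "ORD123")
print(before)
print(before == after)
```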

AI Inventory Assistant (Internal Use Case):

An inventory manager asks the Assistant (MCP Host): "Which summer apparel items risk stocking out next month?"

The Assistant, through its MCP Clients (one per server), sends requests to several MCP Servers (Sales Data MCP Server, Inventory MCP Server, Promotions MCP Server).

These servers retrieve the necessary data from their respective backend systems.

The Assistant receives all data via standardized MCP responses and provides an analysis.

MCP Advantage for RetailCorp: The AI assistant easily combines data from diverse internal systems. Adding a new data source (e.g., supplier shipment tracking) involves creating a new MCP Server for it, which the assistant can then query seamlessly.
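A sketch of the inventory assistant's fan-out, under heavy simplifying assumptions: each data source is a hypothetical single-tool server, the stock-out rule (on-hand plus incoming units below promotion-adjusted forecast demand) is an illustrative heuristic rather than a real forecasting method, and all numbers are made-up sample values. The point is the last two lines: registering an `incoming_units` server for supplier shipments changes the result without touching `stockout_risks`.

```python
import json


def single_tool_server(tool_name, data):
    """Hypothetical MCP-style server exposing one tool that returns JSON text.

    data maps a product category to {sku: number}.
    """
    def handle(raw):
        req = json.loads(raw)
        reply = {"jsonrpc": "2.0", "id": req["id"]}
        if req["method"] != "tools/call" or req["params"]["name"] != tool_name:
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            category = req["params"]["arguments"]["category"]
            text = json.dumps(data.get(category, {}))
            reply["result"] = {"content": [{"type": "text", "text": text}]}
        return json.dumps(reply)
    return handle


def call_tool(server, tool, arguments):
    """One client call; decodes the JSON text the tool returned."""
    req = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": tool, "arguments": arguments}}
    reply = json.loads(server(json.dumps(req)))
    return json.loads(reply["result"]["content"][0]["text"])


# Registry of servers keyed by tool name. Numbers are made-up sample data.
servers = {
    "forecast_units": single_tool_server("forecast_units", {"summer": {"tee": 500, "shorts": 200}}),
    "on_hand_units": single_tool_server("on_hand_units", {"summer": {"tee": 450, "shorts": 260}}),
    "promo_uplift": single_tool_server("promo_uplift", {"summer": {"tee": 1.2}}),
}


def stockout_risks(servers, category):
    """Flag SKUs whose available units fall short of promotion-adjusted demand."""
    def fetch(tool):
        # A source contributes only if a server for it is registered.
        if tool not in servers:
            return {}
        return call_tool(servers[tool], tool, {"category": category})

    demand, stock = fetch("forecast_units"), fetch("on_hand_units")
    uplift, incoming = fetch("promo_uplift"), fetch("incoming_units")
    return sorted(sku for sku, units in demand.items()
                  if stock.get(sku, 0) + incoming.get(sku, 0) < units * uplift.get(sku, 1.0))


print(stockout_risks(servers, "summer"))
# A new data source is one more registered server; the analysis code is unchanged.
servers["incoming_units"] = single_tool_server("incoming_units", {"summer": {"tee": 200}})
print(stockout_risks(servers, "summer"))
```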

It's important to understand that MCP:

Is Not a Full Security Solution: MCP standardizes communication. Robust security layers (authentication, authorization, data encryption) must still be implemented around MCP components and the data they handle.

Doesn't Replace AI Logic (like RAG): If an AI agent uses Retrieval Augmented Generation (RAG) to find information, MCP can standardize how the agent reaches its retrieval system (for example, by exposing the retriever as an MCP tool) or how that system reaches its knowledge sources. MCP itself is not the RAG algorithm.

Is a Protocol, Not an Entire Platform: MCP is a foundational building block. Enterprises will typically leverage AI platforms that support or incorporate MCP to effectively develop, deploy, manage, govern, and monitor their AI agents and MCP-enabled services.

Adopting MCP, or similar standardization efforts, offers tangible benefits applicable across all industries:

Faster Time-to-Market for AI Solutions: Simplified integration dramatically accelerates the development and deployment of new AI capabilities.

Reduced Development & Maintenance Costs: Less custom integration code translates to lower initial expenses and ongoing maintenance efforts.

Increased Business Agility: Makes it easier for enterprises to upgrade or swap out backend systems without requiring extensive reprogramming of all connected AI agents.

Enhanced Operational Efficiency & Innovation: AI agents can access a broader range of data and tools more reliably, leading to more sophisticated capabilities, better insights, and more effective automation.

Foundation for Better Governance & Control: Standardized interaction points can make it easier to implement consistent security policies, monitor data access by AI agents, and conduct audits, contributing to overall AI governance.
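Because every tool invocation arrives in the same shape, governance logic can sit at a single chokepoint. The wrapper below is a hypothetical sketch, not an MCP feature: it records each `tools/call` in an audit log and enforces a per-agent allowlist before forwarding the request. The agent ID, the `DeleteOrder` tool, and the error code are illustrative assumptions, and a production setup would pair this with real authentication and durable logging.

```python
import json
from datetime import datetime, timezone


def governed(server, agent_id, allowed_tools, audit_log):
    """Wrap any MCP-style handler with a hypothetical policy check and audit trail.

    Every tool invocation has the same shape (method "tools/call" plus a tool
    name), so one wrapper can govern servers it knows nothing else about.
    """
    def handle(raw):
        req = json.loads(raw)
        if req.get("method") != "tools/call":
            return server(raw)
        tool = req["params"]["name"]
        allowed = tool in allowed_tools
        audit_log.append({"time": datetime.now(timezone.utc).isoformat(),
                          "agent": agent_id, "tool": tool, "allowed": allowed})
        if not allowed:
            # -32001 is an arbitrary pick from JSON-RPC's server-defined error range.
            error = {"code": -32001, "message": f"{tool} not permitted for {agent_id}"}
            return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
        return server(raw)
    return handle


def order_server(raw):
    """Stand-in server that answers every request with a fixed status."""
    req = json.loads(raw)
    result = {"content": [{"type": "text", "text": "Status: Shipped"}]}
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


log = []
chatbot_view = governed(order_server, "support-chatbot", {"GetOrderStatus"}, log)
for request_id, tool in enumerate(["GetOrderStatus", "DeleteOrder"], start=1):
    req = {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
           "params": {"name": tool, "arguments": {}}}
    print(json.loads(chatbot_view(json.dumps(req))))
print([(entry["tool"], entry["allowed"]) for entry in log])
```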

The Model Context Protocol (MCP) represents a crucial step toward making sophisticated AI agents more practical, scalable, and manageable for enterprises in any sector. By standardizing how these agents connect to the vast and varied ecosystem of tools and data they require, MCP helps reduce complexity, foster interoperability, and accelerate innovation. It is a key enabler for organizations looking to build truly intelligent, integrated, and impactful AI solutions.