AI Security: Expert Insights on Integration Risks

Enterprise AI adoption has reached a critical juncture. While organizations race to implement artificial intelligence solutions, security breaches targeting AI systems have increased by 300% in the past year alone. The challenge isn't just about deploying AI—it's about doing so securely without compromising operational integrity or exposing sensitive data.

Understanding ai integration security considerations has become essential for IT leaders navigating the complex landscape of AI implementation. This comprehensive guide provides expert insights into the unique security challenges of AI systems and practical strategies for safe, secure AI integration across enterprise environments.

AI systems introduce fundamentally different security challenges compared to traditional software applications. Unlike conventional cybersecurity that focuses primarily on code vulnerabilities and network protection, AI security must address data-driven threats that can manipulate the learning process itself.

Traditional security measures often fall short when protecting AI systems because machine learning models create new attack surfaces. These include training data manipulation, model extraction attempts, and adversarial inputs designed to fool AI decision-making processes. The dynamic nature of AI systems, which continuously learn and adapt, requires security approaches that can evolve alongside the technology.

Current threat intelligence reveals that AI integration risks are escalating rapidly. Organizations report an average of 15 AI-specific security incidents per quarter, with data poisoning attacks representing 40% of all AI-related breaches. The regulatory environment has responded accordingly, with frameworks like the NIST AI Risk Management Framework providing guidance for secure AI deployment.

The financial impact of AI security failures extends beyond immediate breach costs. Organizations face regulatory penalties, intellectual property theft, and long-term reputation damage when AI systems are compromised.

Data poisoning represents one of the most significant security considerations for AI systems. Attackers inject malicious data into training datasets, causing models to learn incorrect patterns or behaviors. This type of attack is particularly dangerous because it can remain undetected for extended periods while gradually degrading system performance.

AI data protection challenges also include privacy concerns where sensitive information inadvertently appears in AI outputs. Models trained on personal data may reveal confidential information through their responses, creating compliance violations and privacy breaches.

Adversarial attacks exploit the mathematical foundations of machine learning algorithms. By making subtle modifications to input data—often imperceptible to humans—attackers can cause AI systems to make incorrect predictions or classifications. These attacks are particularly concerning in critical applications like autonomous vehicles or medical diagnosis systems.

Model theft represents another significant vulnerability where attackers attempt to reverse-engineer proprietary AI models through carefully crafted queries. This intellectual property theft can undermine competitive advantages and expose sensitive algorithmic approaches.

Expert Insight

Organizations implementing AI without proper security frameworks experience 60% more security incidents than those following structured AI security protocols. The key is building security into the AI development lifecycle from day one, not retrofitting it later.

When integrating AI into existing enterprise systems, compatibility issues often create security gaps. Legacy infrastructure may lack the security controls necessary to protect AI workloads, while rapid AI deployment can bypass established security review processes.

API security becomes critical when AI services communicate with other systems. Unsecured endpoints can expose AI models to unauthorized access or manipulation, while inadequate authentication mechanisms may allow unauthorized users to access sensitive AI capabilities.

Implementing differential privacy techniques helps protect individual data points while maintaining the utility of AI models. This approach adds mathematical noise to datasets, making it impossible to identify specific individuals while preserving overall data patterns for training purposes.

Data minimization strategies ensure AI systems only access information necessary for their specific functions. This principle reduces the potential impact of data breaches while improving system performance by focusing on relevant data sources.

Regulatory compliance for AI systems requires understanding how existing privacy laws apply to machine learning processes. GDPR's "right to explanation" provisions, for example, demand that AI decision-making processes be transparent and auditable.

Industry-specific regulations add additional complexity. Healthcare AI systems must comply with HIPAA requirements, while financial AI applications face SOX and other regulatory frameworks that govern data handling and algorithmic decision-making.

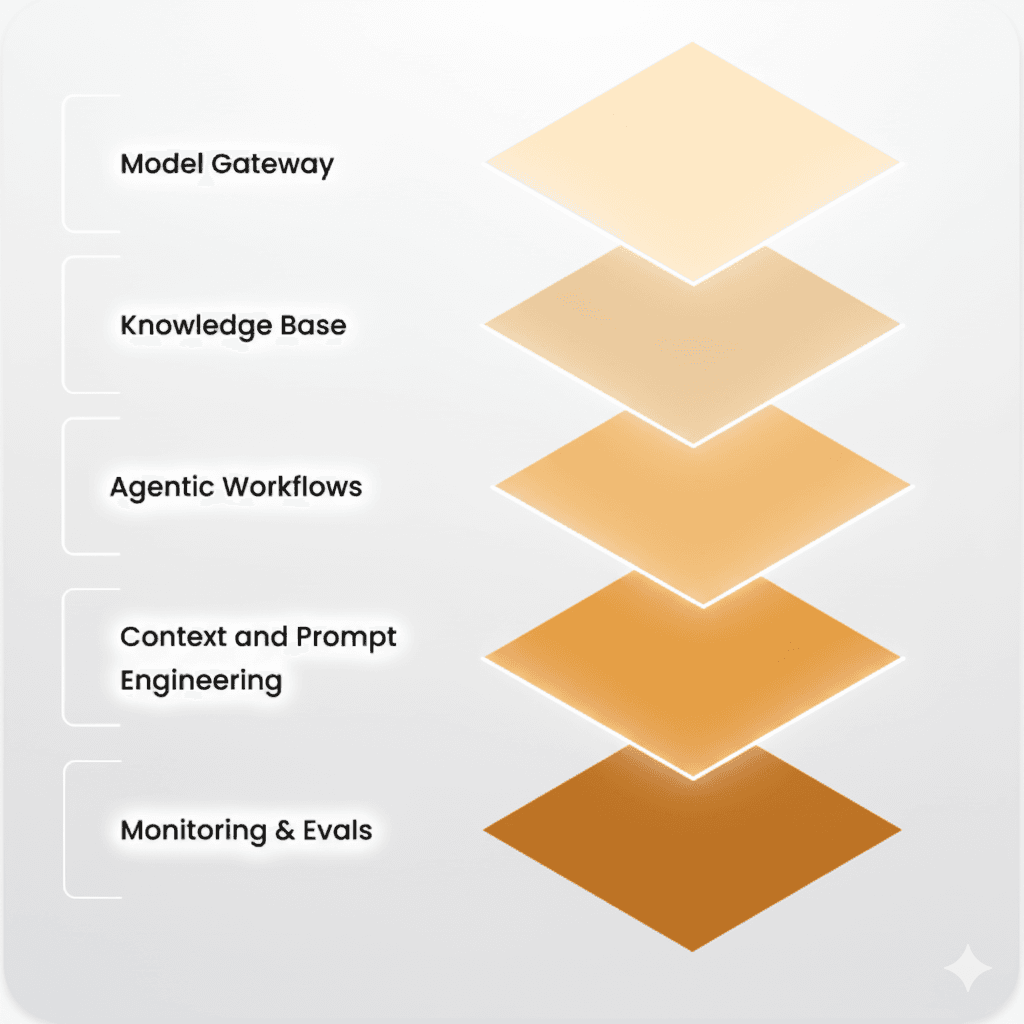

Prompt engineering security has emerged as a critical discipline as organizations deploy large language models and conversational AI systems. Prompt injection attacks represent a new class of vulnerability where malicious users craft inputs designed to override system instructions or extract sensitive information.

Direct prompt injection occurs when users include malicious instructions within their queries, attempting to override the AI system's intended behavior. Indirect prompt injection is more sophisticated, using external content sources to influence AI responses without the user's knowledge.

Prevention strategies include input validation, prompt sanitization, and implementing robust content filtering mechanisms that can identify and block potentially malicious instructions before they reach the AI model.

Effective prompt security requires implementing role-based access controls that limit user interactions based on their authorization levels. This approach ensures sensitive AI capabilities remain accessible only to authorized personnel while maintaining usability for general users.

Prompt versioning and audit trails provide visibility into how AI systems are being used and help identify potential security issues before they escalate into serious breaches.

AI threat modeling requires adapting traditional security frameworks to address the unique characteristics of machine learning systems. The STRIDE methodology can be extended to cover AI-specific threats like model inversion attacks and membership inference attacks.

Comprehensive risk assessment must consider the entire AI lifecycle, from data collection and model training through deployment and ongoing operations. This holistic approach ensures security measures address vulnerabilities at every stage of AI system development and deployment.

Penetration testing for AI applications requires specialized techniques that go beyond traditional security testing. Red team exercises should include attempts to poison training data, execute adversarial attacks, and exploit AI-specific vulnerabilities.

Continuous vulnerability assessment becomes particularly important for AI systems because their behavior can change as they process new data or receive model updates.

.jpg&w=3840&q=75)

Secure AI implementation demands integrating security considerations throughout the development lifecycle. DevSecOps practices must be adapted to include AI-specific security testing, model validation, and deployment verification procedures.

Infrastructure security for AI workloads requires specialized configurations that account for the computational requirements and data flows of machine learning systems. Container security becomes particularly important as organizations deploy AI models in Kubernetes environments.

AI systems require specialized monitoring capabilities that can detect anomalous behavior patterns indicating potential security incidents. Traditional security monitoring tools may miss AI-specific attacks that don't trigger conventional security alerts.

Incident response playbooks must be adapted to address AI-specific scenarios, including procedures for model rollback, data contamination assessment, and adversarial attack mitigation.

The most critical considerations include data privacy protection, preventing data poisoning attacks, implementing robust access controls, securing AI model endpoints, and ensuring compliance with relevant regulations. Organizations must also address prompt injection vulnerabilities and establish comprehensive monitoring for AI-specific threats.

Protection strategies include implementing differential privacy techniques, establishing strong data governance frameworks, encrypting sensitive datasets, maintaining comprehensive access controls, and conducting regular security audits. Organizations should also implement data minimization practices and ensure proper anonymization of training data.

Key challenges include compatibility issues between AI systems and legacy security tools, the complexity of monitoring AI-specific threats, scalability concerns when protecting large-scale AI deployments, and gaps in traditional security frameworks that don't address AI vulnerabilities. Organizations often struggle with the specialized expertise required for AI security.

Companies should develop comprehensive AI governance frameworks that align with existing regulatory requirements, maintain detailed audit trails for AI decision-making processes, implement transparent AI operations, and ensure regular compliance assessments. This includes following guidelines from frameworks like NIST AI RMF and industry-specific regulations.

Prompt engineering is critical for preventing prompt injection attacks, ensuring secure interactions with AI systems, maintaining output integrity, and controlling AI behavior. Proper prompt security includes input validation, role-based access controls, and comprehensive monitoring of AI interactions to detect potential security threats.

The landscape of AI integration security considerations continues to evolve as organizations balance innovation with protection. Success requires a proactive approach that builds security into AI systems from the ground up rather than treating it as an afterthought. By implementing comprehensive security frameworks, organizations can harness the transformative power of AI while maintaining the trust and protection their stakeholders demand. The future of enterprise AI depends on getting security right from the start, ensuring that AI-driven innovation proceeds safely and sustainably.