Transforming AI Development with Full-Stack Solutions

Enterprise AI development has reached a critical inflection point. Organizations worldwide recognize the transformative potential of artificial intelligence, yet many struggle with the complexity of building robust AI solutions. The challenge lies not in understanding AI's value, but in navigating the fragmented landscape of tools, platforms, and technologies required to bring AI applications from concept to production.

This comprehensive guide explores how full-stack AI platforms are revolutionizing enterprise AI development by providing integrated, end-to-end solutions that eliminate complexity while maintaining security and control. You'll discover the essential components of modern AI development platforms, implementation best practices, and strategic considerations for selecting the right solution for your organization.

A full-stack AI platform represents a fundamental shift from traditional, fragmented AI tooling to comprehensive, integrated development environments. Unlike point solutions that address specific aspects of AI development, these platforms provide a unified ecosystem that spans the entire AI application lifecycle.

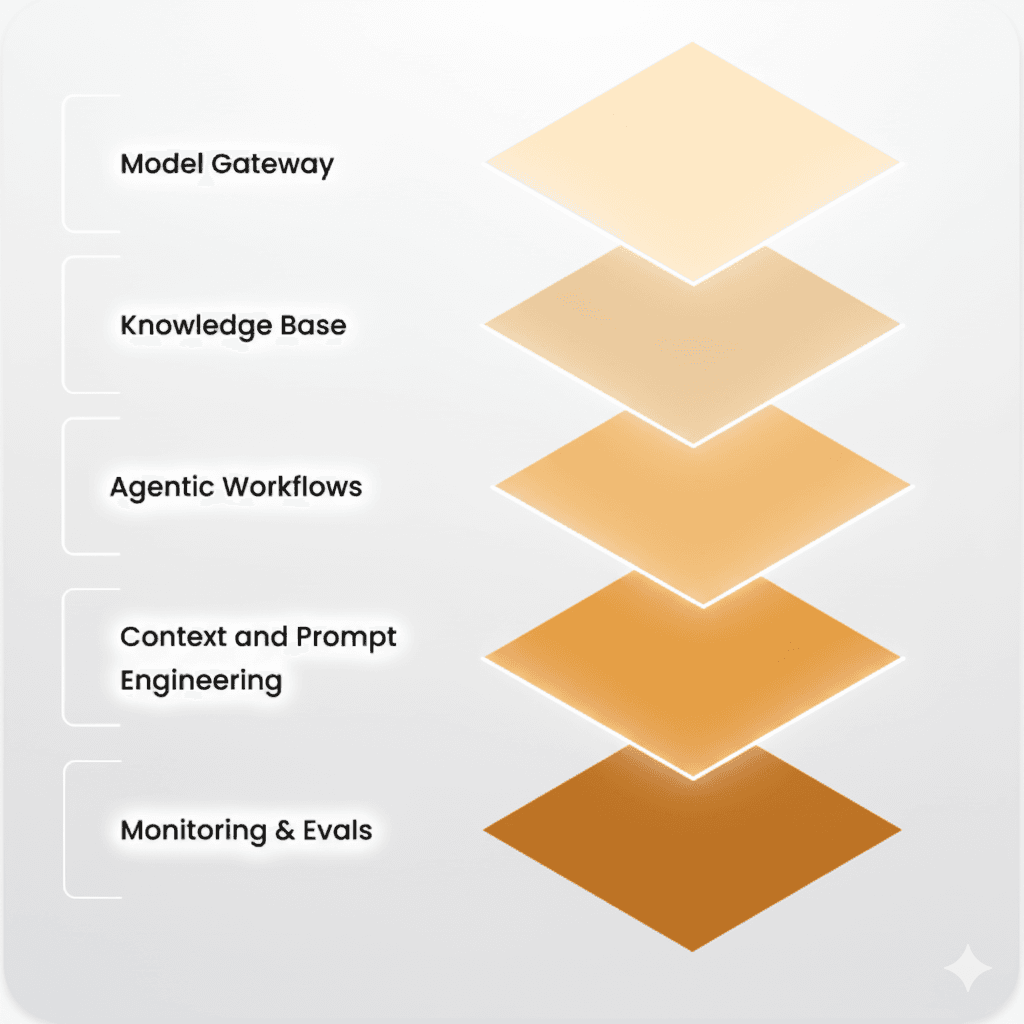

Modern AI platforms integrate multiple layers of functionality into a cohesive whole. The data management layer handles ingestion, preprocessing, and quality assurance across diverse data sources. The model development layer provides tools for training, validation, and experimentation, supporting both custom models and automated machine learning workflows.

The deployment infrastructure layer ensures a seamless transition from development to production, while the monitoring and governance layer maintains performance, security, and compliance throughout the application lifecycle. This integrated approach removes the need to manage multiple vendor relationships and tool integrations.

Traditional AI development often requires organizations to stitch together dozens of specialized tools. Data scientists might use one platform for model training, another for data preparation, and yet another for deployment. This fragmented approach creates integration challenges, increases maintenance overhead, and slows development cycles.

Full-stack AI platforms address these challenges by providing pre-integrated components that work seamlessly together. This unified approach reduces technical debt, accelerates time-to-market, and enables teams to focus on solving business problems rather than managing infrastructure complexity.

Enterprise-grade AI development platforms must provide comprehensive capabilities across multiple domains to support diverse use cases and organizational requirements.

Effective data management forms the foundation of successful AI applications. Modern platforms provide automated data ingestion from multiple sources, including databases, APIs, and real-time streams. Built-in data quality monitoring ensures consistency and reliability, while preprocessing capabilities handle transformation, cleaning, and feature engineering tasks.
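To make the idea of built-in data quality monitoring concrete, here is a minimal sketch of the kind of check such a layer might run on ingested records. The `check_quality` function, the `QualityReport` type, and the sample fields are hypothetical and chosen for illustration; they are not taken from any particular platform.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    total: int          # number of records inspected
    missing: dict       # field name -> count of records where it is absent or None
    out_of_range: int   # records whose range-checked field falls outside [lo, hi]


def check_quality(records, required_fields, range_field, lo, hi):
    """Count missing required fields and out-of-range values in a batch."""
    missing = {field: 0 for field in required_fields}
    out_of_range = 0
    for record in records:
        for field in required_fields:
            if record.get(field) is None:
                missing[field] += 1
        value = record.get(range_field)
        if value is not None and not (lo <= value <= hi):
            out_of_range += 1
    return QualityReport(len(records), missing, out_of_range)


# Hypothetical sample batch: one missing age, one implausible age.
records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 3, "age": 212},
]
report = check_quality(records, ["id", "age"], "age", 0, 120)
print(report)
```

In practice a platform would likely run checks like this continuously and raise alerts when the counts cross a threshold, rather than printing a one-off report.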

Real-time data streaming capabilities enable applications that must respond immediately to changing conditions. The same infrastructure supports batch processing for training and offline inference as well as stream processing for real-time applications.

Comprehensive machine learning platforms within full-stack solutions support the complete model development lifecycle. Teams can leverage automated machine learning capabilities for rapid prototyping while maintaining the flexibility to develop custom models when business requirements demand specialized approaches.

Model training infrastructure provides scalable compute resources that automatically adjust based on workload requirements. Version control and experiment tracking ensure reproducibility and enable teams to compare different approaches systematically.

As generative AI becomes central to enterprise applications, prompt engineering tools have emerged as critical platform components. These tools provide template management systems that enable teams to create, version, and optimize prompts systematically.
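A template management system of this kind can be pictured as a small versioned registry. The sketch below is an illustration only: `PromptRegistry` and its methods are hypothetical names, and it uses Python's standard `string.Template` for substitution rather than any vendor's prompt tooling.

```python
from string import Template


class PromptRegistry:
    """Stores every version of each named prompt template."""

    def __init__(self):
        self._versions = {}  # template name -> list of template strings

    def register(self, name, text):
        """Add a new version and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(text)
        return len(versions)

    def render(self, name, version=None, **variables):
        """Render the latest version, or a pinned one if `version` is given."""
        versions = self._versions[name]
        text = versions[-1] if version is None else versions[version - 1]
        return Template(text).substitute(**variables)


registry = PromptRegistry()
registry.register("summarize", "Summarize this text: $text")
registry.register("summarize", "Summarize in $n bullet points: $text")

latest = registry.render("summarize", text="Q3 report", n=3)
pinned = registry.render("summarize", version=1, text="Q3 report")
print(latest)
print(pinned)
```

Keeping old versions addressable by number is what makes it possible to pin a production application to a known prompt while a newer one is still being evaluated.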

Advanced platforms include A/B testing capabilities for prompt performance, allowing teams to optimize outputs based on real-world usage patterns. Integration with large language models enables rapid prototyping and deployment of conversational AI applications.
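As a rough sketch of how prompt A/B testing can work, the example below assigns each user to a variant deterministically and tracks a success rate per variant. The names `assign_variant` and `ABResults` are hypothetical, and what counts as a "success" (a thumbs-up, a completed task) is an assumption left to the application.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id, variants=("A", "B")):
    """Map a user to a variant by hashing the ID, so assignment is stable."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


class ABResults:
    """Accumulates trial and success counts per prompt variant."""

    def __init__(self):
        self.trials = defaultdict(int)
        self.successes = defaultdict(int)

    def record(self, variant, success):
        self.trials[variant] += 1
        if success:
            self.successes[variant] += 1

    def rate(self, variant):
        """Return the observed success rate, or 0.0 if no trials were recorded."""
        n = self.trials[variant]
        return self.successes[variant] / n if n else 0.0


results = ABResults()
for user_id, success in [("u1", True), ("u2", False), ("u3", True)]:
    results.record(assign_variant(user_id), success)

for variant in ("A", "B"):
    print(variant, results.trials[variant], results.rate(variant))
```

Hashing the user ID keeps each user on the same prompt variant across sessions. A real comparison would also need enough traffic and a significance test before declaring a winner, which this sketch omits.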

Expert Insight

Organizations that adopt integrated full-stack AI platforms report 40% faster time-to-market for AI applications compared to those using fragmented toolchains, primarily due to reduced integration overhead and streamlined workflows.

Production deployment capabilities distinguish enterprise platforms from development-focused tools. AI model deployment infrastructure provides containerization and orchestration capabilities that ensure consistent performance across different environments.

Automatic scaling and load balancing handle varying demand patterns without manual intervention. Blue-green deployment strategies enable zero-downtime updates, while rollback capabilities provide safety nets for production changes.
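The blue-green pattern mentioned above can be sketched in a few lines. This is a simplified in-memory model, assuming two slots and a caller-supplied health check; `BlueGreenRouter` is a hypothetical name, and real platforms implement the switch at the load balancer or orchestration layer.

```python
class BlueGreenRouter:
    """Keeps two deployment slots; only one serves traffic at a time."""

    def __init__(self, live_version):
        self.slots = {"blue": live_version, "green": None}
        self.live = "blue"

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def deploy(self, version, health_check):
        """Stage `version` in the idle slot and switch only if it is healthy."""
        target = self.idle
        previous = self.slots[target]
        self.slots[target] = version
        if not health_check(version):
            self.slots[target] = previous  # keep the old rollback target
            return False
        self.live = target  # traffic moves in one step, so no downtime
        return True

    def rollback(self):
        """Switch traffic back to the version held in the idle slot."""
        if self.slots[self.idle] is None:
            raise RuntimeError("no previous version to roll back to")
        self.live = self.idle

    def serving(self):
        return self.slots[self.live]


router = BlueGreenRouter("model-v1")
router.deploy("model-v2", health_check=lambda version: True)
print(router.serving())
router.rollback()
print(router.serving())
```

Because the previous version stays loaded in the idle slot, rolling back is the same cheap switch as deploying, which is the main appeal of the pattern.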

Enterprise AI solutions require comprehensive monitoring and governance capabilities to maintain performance and compliance standards. Real-time performance tracking identifies model drift and degradation before they impact business outcomes.
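One common way to quantify drift is the population stability index (PSI), which compares the distribution of a feature or score in production against a training-time baseline. The sketch below is a minimal, assumption-laden version: the equal-width binning, the `1e-6` floor, and the 0.25 alert threshold are conventional choices rather than anything prescribed here, and `psi` is a hypothetical helper name.

```python
import math


def psi(expected, actual, bins=10):
    """Population stability index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), bins - 1)  # clamp values outside the baseline range
            counts[index] += 1
        # Floor each proportion so the logarithm below is always defined.
        return [max(count / len(values), 1e-6) for count in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]
shifted = [value + 0.5 for value in baseline]

score = psi(baseline, shifted)
# 0.25 is a widely used rule-of-thumb threshold, treated here as an assumption.
print("drift detected" if score > 0.25 else "stable", round(score, 3))
```

A monitoring layer would typically compute a metric like this on a schedule for each important feature and for the model's output scores, alerting when the value stays above the chosen threshold.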

Built-in compliance frameworks address regulatory requirements across different industries and regions. Audit trails provide complete visibility into model development, deployment, and usage patterns, supporting both internal governance and external compliance requirements.

Generative AI platforms represent a specialized subset of full-stack solutions focused on large language models and multi-modal AI applications. These platforms provide fine-tuning capabilities that enable organizations to adapt pre-trained models to specific domains and use cases.

Multi-modal support extends beyond text generation to include image, audio, and video processing capabilities. Custom model hosting and API management enable organizations to deploy proprietary models while maintaining security and control over intellectual property.

Integration with existing enterprise systems ensures that generative AI capabilities enhance rather than replace existing workflows. This approach maximizes return on investment while minimizing disruption to established business processes.

Full-stack AI platforms deliver measurable benefits across multiple dimensions of enterprise operations, from development efficiency to cost optimization and risk management.

Integrated platforms eliminate the time and effort required to integrate multiple tools and manage complex dependencies. Pre-built connectors and standardized APIs enable rapid prototyping and iteration, reducing time-to-market for new AI applications.

Automated workflows handle routine tasks like data preprocessing, model validation, and deployment preparation. This automation frees data science teams to focus on high-value activities like feature engineering and model optimization.

Consolidated tooling reduces licensing costs and eliminates redundant infrastructure investments. Shared compute resources and automatic scaling optimize resource utilization, reducing overall infrastructure costs compared to maintaining separate systems for different AI workloads.

Reduced maintenance overhead translates to lower operational costs and improved team productivity. Organizations can reallocate resources from infrastructure management to innovation and business value creation.

Unified workspaces enable seamless collaboration between data scientists, engineers, and business stakeholders. Shared project repositories and standardized workflows ensure knowledge transfer and reduce dependency on individual team members.

Version control and documentation capabilities support collaborative development while maintaining audit trails for compliance and governance requirements.

Successful AI application development requires a structured approach that addresses technical, business, and operational considerations throughout the development lifecycle.

Effective AI projects begin with clear requirements gathering and use case definition. Teams must identify specific business problems, success metrics, and technical constraints before selecting appropriate technologies and approaches.

Architecture decisions made during planning significantly impact long-term success. Full-stack platforms provide flexibility to adapt architectural choices as requirements evolve while maintaining consistency across the development stack.

The development phase leverages platform capabilities for data preparation, feature engineering, and model training. Integrated tools streamline these processes while maintaining flexibility for custom approaches when standard methods prove insufficient.

Hyperparameter tuning and model selection benefit from automated optimization capabilities, while integration with existing systems ensures that new AI capabilities enhance rather than disrupt established workflows.

Comprehensive testing frameworks validate both technical performance and business impact. Performance benchmarking ensures that models meet accuracy and latency requirements, while user acceptance testing validates that applications address real business needs.

Continuous feedback loops enable iterative improvement based on real-world usage patterns and changing business requirements.

Production deployment strategies must balance speed and safety. Phased rollouts enable teams to validate performance in production environments while minimizing risk to critical business processes.
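A phased rollout can be thought of as a small state machine: traffic to the new model increases in stages, and each stage must pass a health gate before the next begins. The sketch below assumes hypothetical stage percentages and a 2% error-rate gate; `PhasedRollout` is an illustrative name, not a platform API.

```python
class PhasedRollout:
    """Advances traffic share stage by stage while the error rate stays acceptable."""

    def __init__(self, stages=(5, 25, 50, 100), max_error_rate=0.02):
        self.stages = stages                  # percent of traffic at each stage
        self.max_error_rate = max_error_rate  # gate for promotion to the next stage
        self.index = 0
        self.halted = False

    @property
    def traffic_percent(self):
        return 0 if self.halted else self.stages[self.index]

    def evaluate(self, requests, errors):
        """Promote on a healthy stage, halt on an unhealthy one; return new traffic share."""
        if self.halted or requests == 0:
            return self.traffic_percent
        if errors / requests > self.max_error_rate:
            self.halted = True  # stop sending traffic to the new version
        elif self.index < len(self.stages) - 1:
            self.index += 1
        return self.traffic_percent


rollout = PhasedRollout()
print(rollout.traffic_percent)        # initial stage
print(rollout.evaluate(1000, 5))      # healthy stage, so traffic share increases
print(rollout.evaluate(1000, 50))     # error rate above the gate, so rollout halts
```

Halting sets the new version's share to zero in this sketch; a team might instead prefer to step back one stage, depending on how critical the affected process is.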

Monitoring and continuous improvement processes ensure that deployed applications maintain performance standards and adapt to changing conditions over time.

Choosing the right AI infrastructure requires careful evaluation of technical capabilities, business requirements, and long-term strategic objectives.

Scalability requirements vary significantly across different use cases and organizational sizes. Platforms must support current workloads while providing clear paths for growth as AI adoption expands throughout the organization.

Integration capabilities determine how effectively new AI applications can enhance existing business processes. Native connectors and standardized APIs reduce implementation complexity and ongoing maintenance requirements.

Total cost of ownership analysis must consider both direct platform costs and indirect expenses like training, support, and ongoing maintenance. Transparent pricing models enable accurate budgeting and cost forecasting.
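A simple way to structure a total cost of ownership estimate is to separate one-time costs from recurring annual costs and project them over a planning horizon. The figures below are entirely hypothetical placeholders meant to show the arithmetic, not benchmarks, and `total_cost_of_ownership` is an illustrative helper.

```python
def total_cost_of_ownership(annual_costs, one_time_costs, years):
    """Sum one-time costs plus recurring annual costs over the given horizon."""
    return sum(one_time_costs.values()) + years * sum(annual_costs.values())


# Hypothetical figures in a single currency, for illustration only.
annual = {
    "licensing": 120_000,
    "infrastructure": 80_000,
    "support": 30_000,
    "maintenance": 40_000,
}
one_time = {
    "integration": 60_000,
    "training": 25_000,
}

tco = total_cost_of_ownership(annual, one_time, years=3)
print(tco)  # 85_000 one-time + 3 * 270_000 recurring = 895000
```

Running the same calculation for each candidate platform, and for the existing fragmented toolchain, gives a like-for-like basis for comparison. A fuller model would also discount future costs and account for usage-based pricing.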

Vendor lock-in risks require careful evaluation of data portability, API standards, and migration capabilities. Organizations should maintain flexibility to adapt their technology stack as requirements evolve.

Enterprise security requirements demand comprehensive data protection, access controls, and audit capabilities. Industry-specific compliance requirements may impose additional constraints on platform selection and deployment approaches.

Built-in governance frameworks reduce the complexity of maintaining compliance while enabling innovation and experimentation within appropriate boundaries.

A full-stack AI platform integrates all components of the AI development lifecycle into a unified environment, eliminating the complexity of managing multiple disparate tools while providing seamless workflows from data preparation to production deployment.

Implementation timelines typically range from 3 to 6 months for initial deployment, with full organizational adoption following within 6 to 12 months, depending on the complexity and scope of the use cases.

Key cost factors include platform licensing, infrastructure resources, training and support services, integration efforts, and ongoing maintenance and optimization activities.

Modern full-stack AI platforms provide enterprise-grade security features including encryption, access controls, audit trails, and compliance certifications that meet or exceed traditional on-premises security standards.

These platforms typically offer multiple interfaces ranging from no-code visual tools for business users to advanced APIs and custom development environments for experienced data scientists and engineers.

The transformation of AI development through full-stack solutions represents a fundamental shift toward more efficient, secure, and scalable approaches to enterprise AI adoption. Organizations that embrace integrated platforms position themselves to accelerate innovation while maintaining the control and security essential for enterprise success. The key lies in selecting solutions that align with both current requirements and long-term strategic objectives, ensuring that AI investments deliver sustainable competitive advantages.

As the AI landscape continues to evolve, the organizations that thrive will be those that can rapidly adapt and scale their AI capabilities while maintaining operational excellence. Full-stack AI platforms provide the foundation for this transformation, enabling enterprises to focus on creating value rather than managing complexity.